Towards Understanding Engagement in Human-Robot Interaction

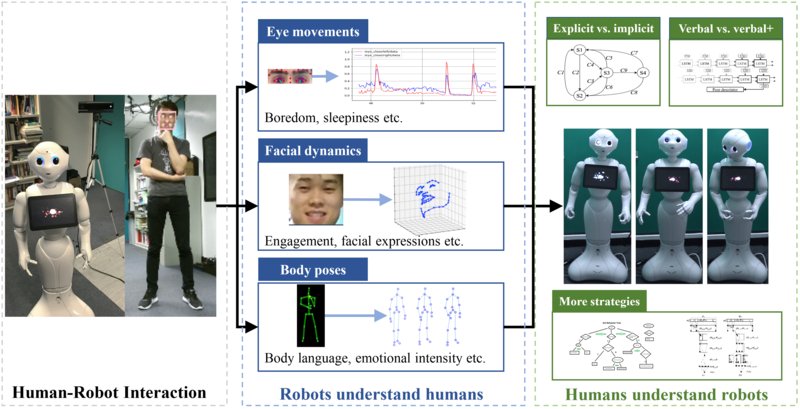

How can a robot understand its human partners' engagement during Human-Robot Interaction (HRI)? One of the most straightforward and effective ways is through its eyes, i.e., its cameras. The HKUST HCI Initiative has therefore conducted a series of HRI studies to equip robots with the ability to visually perceive human states at different scales, from eyes and faces to body poses.

In natural human-human interactions, people make eye contact from time to time, conveying rich information such as attention and interest. The HCI Initiative studied how to estimate participants' level of boredom or sleepiness (i.e., their attentional engagement) in real-time HRI by temporally modeling typical eye-related movements, namely eyelid and eyeball motions.
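To make "temporal modeling of eyelid motion" more concrete, here is a minimal sketch of one well-known measure for the eyelid channel, a PERCLOS-style closure ratio (the fraction of recent frames in which the eye is mostly closed). This is a swapped-in illustration, not the HCI Initiative's model, and it ignores eyeball motion. It assumes some upstream detector already supplies a per-frame eyelid openness value between 0 (closed) and 1 (fully open); the class name `PerclosMonitor` and all thresholds are hypothetical.

```python
from collections import deque


class PerclosMonitor:
    """Hypothetical sliding-window sleepiness estimate from eyelid openness."""

    def __init__(self, window: int = 90, closed_below: float = 0.2,
                 sleepy_ratio: float = 0.3) -> None:
        # window: frames to look back over (e.g. 3 s at 30 fps); all defaults are assumptions
        self.closed_below = closed_below
        self.sleepy_ratio = sleepy_ratio
        self.frames: deque = deque(maxlen=window)

    def update(self, openness: float) -> float:
        """Record one frame's openness and return the current closure ratio."""
        # A frame counts as "closed" when openness falls below the threshold
        self.frames.append(openness < self.closed_below)
        return sum(self.frames) / len(self.frames)

    def is_sleepy(self) -> bool:
        """Flag low attentional engagement only once a full window is available."""
        if len(self.frames) < self.frames.maxlen:
            return False
        return sum(self.frames) / len(self.frames) >= self.sleepy_ratio


monitor = PerclosMonitor(window=10)
for value in [0.9] * 6 + [0.05] * 4:
    ratio = monitor.update(value)
print(ratio, monitor.is_sleepy())
```

Requiring a full window before raising a flag trades a short start-up delay for fewer false alarms from a single blink.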

Compared with the eyes, faces provide more fine-grained information about shifts in a person's attentional engagement. An interactive robot needs to constantly check whether participants are facing it and whether they are still engaged in the current interaction. The HCI Initiative proposed an approach that senses participants' engagement transitions by modeling the dynamics of their face orientations together with other social signals. Through this face modeling, a robot could effectively detect engagement changes when participants became distracted by peripheral devices.
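As a rough illustration of how face-orientation dynamics can be turned into engagement transitions, the sketch below uses only head yaw and a simple persistence rule: a change from "facing the robot" to "looking away" (or back) is reported only after it lasts for several consecutive frames. This is an assumed simplification, not the published approach, and it omits the other social signals. It assumes yaw angles in degrees with the robot at 0; `FaceEngagementTracker` and its parameters are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FaceEngagementTracker:
    facing_within_deg: float = 20.0  # hypothetical: |yaw| below this counts as facing the robot
    min_frames: int = 15             # hypothetical: frames a new state must persist (~0.5 s at 30 fps)
    engaged: bool = True
    _run: int = 0                    # consecutive frames disagreeing with the current state

    def update(self, yaw_deg: float) -> Optional[str]:
        """Feed one frame's head yaw; return a transition label or None."""
        facing = abs(yaw_deg) < self.facing_within_deg
        if facing == self.engaged:
            # Observation agrees with the current state, so discard any partial run
            self._run = 0
            return None
        self._run += 1
        if self._run < self.min_frames:
            return None
        # The new state has persisted long enough: commit the transition
        self.engaged = facing
        self._run = 0
        return "re-engaged" if facing else "disengaged"


tracker = FaceEngagementTracker(min_frames=3)
events = [tracker.update(y) for y in [0, 5, 45, 50, 0, 45, 50, 55, 60, 2, 3, 1]]
print([e for e in events if e])
```

The persistence rule keeps a quick glance at a phone from being treated as disengagement, while a sustained turn away is.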

In addition to attentional engagement, faces are also highly indicative of affective engagement in interactions. To be emotionally intelligent, a robot should also be sensitive to this state. Many studies have used facial movements to recognize the type of emotional expression. However, even when the same affect is shown, its intensity may fluctuate over time. The HCI Initiative set out to capture this largely overlooked intensity of affective engagement from rich gesture and body posture expressions. It therefore developed a model that combines body poses and faces to help a robot "feel" the subtle emotional fluctuations of its human partners throughout their interaction. In empirical tests, the robot appeared more sensitive to changes in human emotion and was thus perceived as more emotionally intelligent.
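One simple way to picture "combining body poses and faces" to track intensity over time is late fusion followed by smoothing. The sketch below assumes separate upstream estimators that each output an intensity score between 0 and 1 (or `None` when, say, the face is occluded), takes a weighted average, and applies an exponential moving average so the robot follows fluctuations without reacting to per-frame noise. This is an illustrative assumption, not the HCI Initiative's model; `IntensityFuser`, the weights, and the smoothing factor are hypothetical.

```python
from typing import Optional


class IntensityFuser:
    """Hypothetical late fusion of face and body emotion-intensity scores."""

    def __init__(self, face_weight: float = 0.5, alpha: float = 0.3) -> None:
        self.face_weight = face_weight  # assumed share of the face channel in the fused score
        self.alpha = alpha              # assumed EMA factor: higher reacts faster to change
        self.smoothed: Optional[float] = None

    def update(self, face: Optional[float], body: Optional[float]) -> Optional[float]:
        """Fuse one frame's scores; a missing channel falls back to the other."""
        if face is None and body is None:
            return self.smoothed  # nothing observed: keep the previous estimate
        if face is None:
            fused = body
        elif body is None:
            fused = face
        else:
            fused = self.face_weight * face + (1 - self.face_weight) * body
        # Exponential moving average tracks gradual fluctuations in intensity
        if self.smoothed is None:
            self.smoothed = fused
        else:
            self.smoothed = self.alpha * fused + (1 - self.alpha) * self.smoothed
        return self.smoothed


fuser = IntensityFuser(face_weight=0.5, alpha=0.5)
print(fuser.update(0.8, 0.4), fuser.update(None, 1.0), fuser.update(None, None))
```

Falling back to the body channel when the face is unavailable is one reason to use both cues: posture can still carry intensity information when facial expressions cannot be read.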

Besides inferring human engagement, a robot should also ensure that its human partners can understand the robot itself. A robot is still far from achieving "humanness" in interactions if its movements are unreadable or unpredictable to people. How can a robot effectively express its intents, its engagement, or even its "smartness" through its movements so that human partners can understand and trust it? To this end, the HCI Initiative developed a set of rule-based robot action strategies.

In one user study, a robot was designed to behave either explicitly or implicitly when conveying its intents. For example, when the robot detected a shift in a participant's engagement, it paused in the implicit mode, or spoke up directly in the explicit mode. The results show that participants always appreciated it when the robot acknowledged their engagement shifts.
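The implicit and explicit strategies described above can be written down as a small rule table. The sketch below is a hedged illustration rather than the study's actual rule set: it assumes engagement shifts arrive as the hypothetical labels `"disengaged"` and `"re-engaged"`, and the spoken lines, the `"resume"` behavior, and all names are made up for illustration.

```python
from enum import Enum
from typing import NamedTuple, Optional


class Mode(Enum):
    IMPLICIT = "implicit"
    EXPLICIT = "explicit"


class Action(NamedTuple):
    behavior: str             # e.g. "pause", "resume", "speak"
    utterance: Optional[str]  # text to say, if any


# Hypothetical utterances used in the explicit mode
EXPLICIT_LINES = {
    "disengaged": "It looks like something caught your attention. Shall I wait?",
    "re-engaged": "Welcome back! Let's continue.",
}


def react(mode: Mode, event: str) -> Action:
    """Rule-based mapping from an engagement-shift event to a robot action."""
    if event not in EXPLICIT_LINES:
        raise ValueError(f"unknown engagement event: {event!r}")
    if mode is Mode.IMPLICIT:
        # Implicit: acknowledge the shift only through timing (pause, then resume)
        return Action("pause" if event == "disengaged" else "resume", None)
    # Explicit: acknowledge the shift out loud
    return Action("speak", EXPLICIT_LINES[event])


print(react(Mode.IMPLICIT, "disengaged"))
print(react(Mode.EXPLICIT, "disengaged"))
```

Keeping the rules as a plain lookup makes each mode's behavior easy to inspect, which fits the goal of making the robot's actions readable and predictable.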

A second user study investigated how a robot should respond to its partner's affective fluctuations. The robot was equipped with two styles of emotional response: it could react with speech only (verbal) or with speech together with expressive gestures and postures (verbal+). The results suggest that verbal responses are sufficient on some occasions, while gestures and postures are more effective for robots to show empathy. By applying these reaction strategies, robots become more understandable in simple interaction scenarios. The HCI Initiative is now exploring more complex and interesting practical applications through more rigorous mathematical modeling and more effective algorithms.
